Start

ComfyUI is the de facto standard for running diffusion models on your own hardware, much as llama.cpp and vLLM are for LLMs.

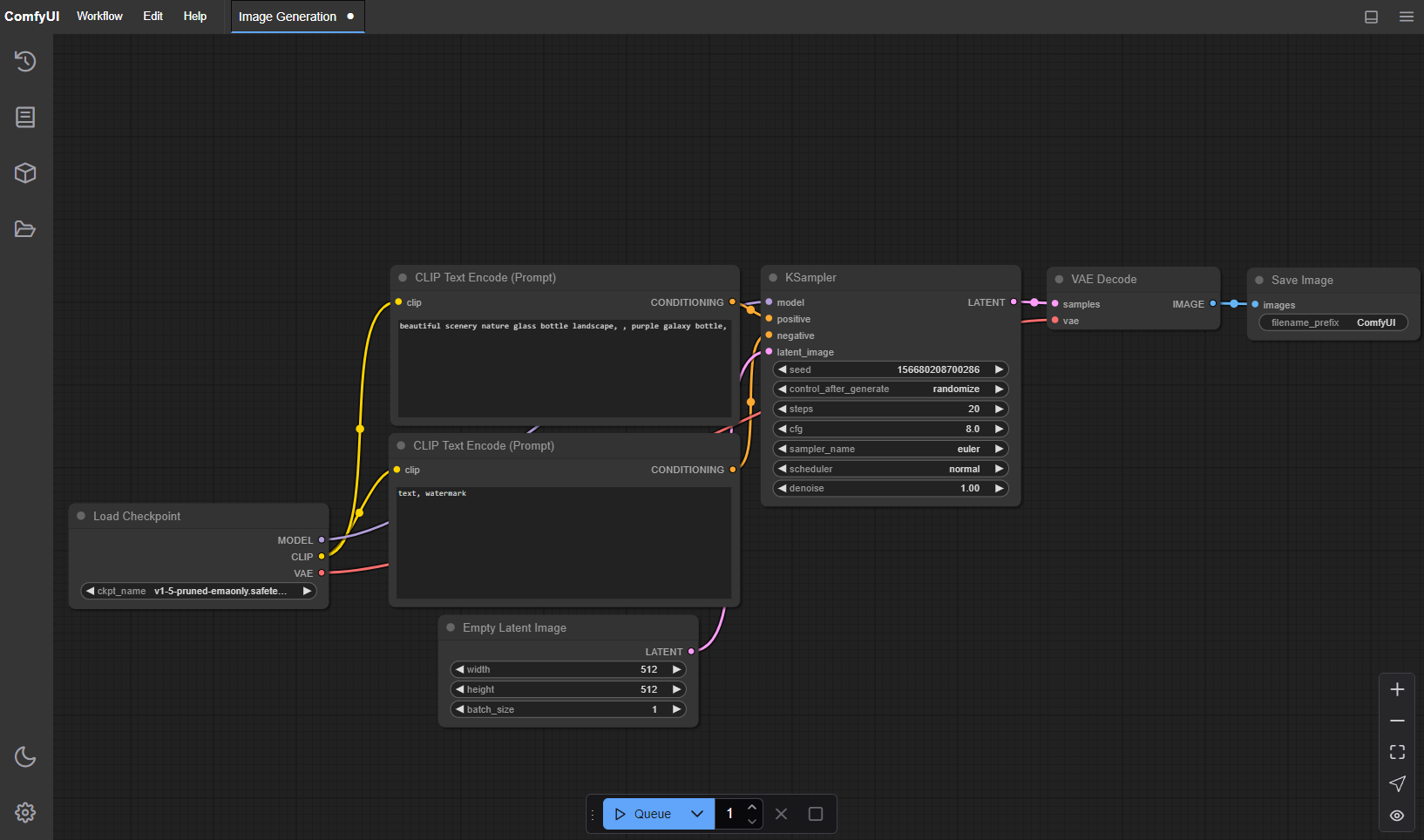

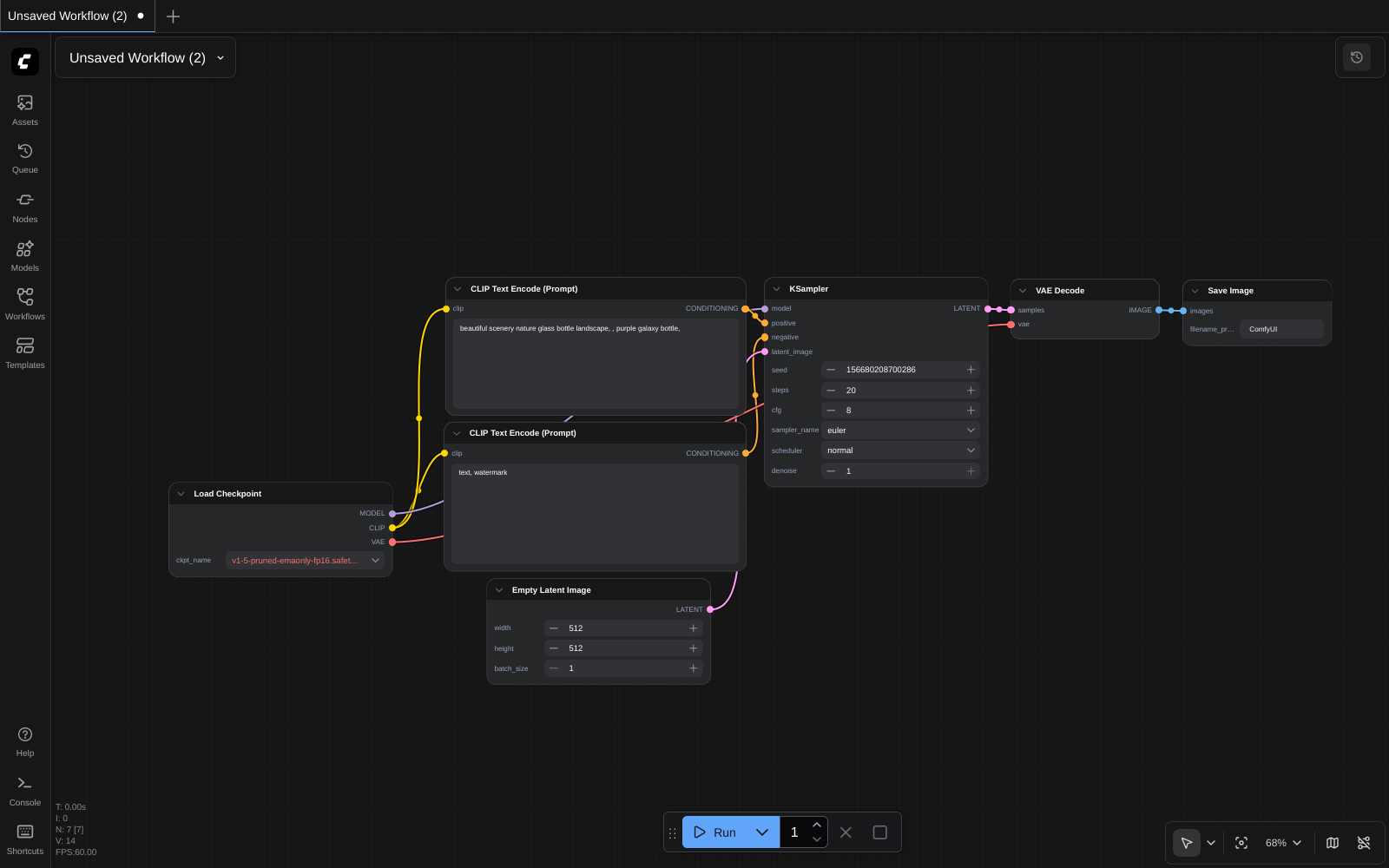

It uses a node-based workflow system to load models and images, encode prompts, manipulate outputs, and so on.

It supports ROCm for AMD GPUs and CUDA for Nvidia GPUs, as well as CPU offloading.

This guide will show you how to set up and run the new Z Image Turbo model.

Download

You’ll need to first install Git, Python, and your GPU’s compute library (ROCm/HIP for AMD, CUDA for Nvidia).

First, clone the ComfyUI repository.

git clone https://github.com/comfyanonymous/ComfyUI.git

cd ComfyUI

Then, we’ll set up a venv so that we can install dependencies cleanly. If you know how, you can use another venv manager instead.

python -m venv venv

source venv/bin/activate # If you're using fish, use the file activate.fish instead

Now, we must install the Python dependencies for your GPU.
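Before installing anything, it can be worth confirming that the venv is actually active, so the packages don't end up in your system Python. Here is a minimal sketch of such a check. The helper name `in_venv` is made up for this example; the only thing it relies on is that the activate scripts created by `python -m venv` export the `VIRTUAL_ENV` variable.

```shell
# Hypothetical helper (not part of Python or ComfyUI):
# succeeds only when a virtual environment is active in this shell.
in_venv() {
    # The activate scripts created by `python -m venv` export VIRTUAL_ENV.
    [ -n "${VIRTUAL_ENV:-}" ]
}

if in_venv; then
    echo "venv active: $VIRTUAL_ENV"
else
    echo "no venv active; run 'source venv/bin/activate' first"
fi
```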

AMD (ROCm)

pip install torch torchvision --index-url https://download.pytorch.org/whl/rocm6.4

Nvidia (CUDA)

pip install torch torchvision --index-url https://download.pytorch.org/whl/cu130

And finally, install ComfyUI’s remaining dependencies from requirements.txt:

pip install -r requirements.txt

Use

Note: If you’re using a technically unsupported AMD GPU, you may need to include a special environment variable. On my RX 6600 XT, I needed to add HSA_OVERRIDE_GFX_VERSION=10.3.0.
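One way to apply such a variable is to prefix the launch command from the next step with it, which sets it for that one process only. The sketch below wraps this in a small function. The names `start_comfyui`, `GFX_VERSION`, and `PYTHON` are made up for this example, and `10.3.0` is only the value that worked on my card; yours may need a different value, or none at all.

```shell
# Hypothetical wrapper; start_comfyui, GFX_VERSION and PYTHON are names
# invented for this sketch. 10.3.0 is the value that worked on an RX 6600 XT.
start_comfyui() {
    # A VAR=value prefix sets the variable for this one command only,
    # so the rest of the shell session is left untouched.
    HSA_OVERRIDE_GFX_VERSION="${GFX_VERSION:-10.3.0}" \
        "${PYTHON:-python}" main.py "$@"
}

# Usage, from inside the ComfyUI directory with the venv active:
#   start_comfyui --listen 0.0.0.0
```

Any arguments you pass to the function are forwarded to main.py unchanged.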

Start ComfyUI.

python main.py

Two optional flags can be appended to this command:

--listen 0.0.0.0 # Lets other devices on the network access ComfyUI

--preview-method auto # Shows previews as the images generate

If all goes well, you should see console output like this:

Context impl SQLiteImpl.

Will assume non-transactional DDL.

No target revision found.

Starting server

To see the GUI go to: http://0.0.0.0:8188

You can now open ComfyUI by going to http://localhost:8188. From here, you’ll probably be met with a graph of nodes titled “Unsaved Workflow”.

Clicking “Run” will not do anything, since we don’t have any models installed. Let’s try Z Image Turbo!

I’m actually not going to download straight from there. I’d recommend downloading a quantized version, as it has a minuscule impact on quality while greatly improving memory efficiency and speed.

Diffusion model

First, download the diffusion model. We’re going to use an fp8 version. This model performs the actual denoising that produces the image.

Place this file in ComfyUI/models/diffusion_models.

Text encoder

Second, download the text encoder. This, too, is quantized to fp8. Z Image Turbo uses Qwen3 4B as its text encoder, which turns the prompt you enter into embeddings for the diffusion model.

Place this file in ComfyUI/models/text_encoders.

VAE

Finally, download the VAE. I’d recommend renaming this to zimageturbovae.safetensors just for clarity. This encodes and decodes images to and from latent space.

Place this file in ComfyUI/models/vae.
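With three files going into three different folders, it's easy to misplace one. Here is a rough sketch of a check you could run from the directory that contains ComfyUI. `check_models` and `COMFYUI_DIR` are names I made up for this example, and it assumes all three downloads are `.safetensors` files; adjust the pattern if yours use a different extension.

```shell
# Illustrative names: COMFYUI_DIR and check_models are not part of ComfyUI.
COMFYUI_DIR="${COMFYUI_DIR:-ComfyUI}"

# Reports, for each of the three model folders, whether it holds at least
# one .safetensors file (assumed extension). Returns non-zero if any is empty.
check_models() {
    missing=0
    for sub in diffusion_models text_encoders vae; do
        dir="$COMFYUI_DIR/models/$sub"
        # ls fails when the pattern matches nothing, which flags the folder.
        if ls "$dir"/*.safetensors >/dev/null 2>&1; then
            echo "ok       $sub"
        else
            echo "missing  $sub"
            missing=1
        fi
    done
    return "$missing"
}

# Usage:
#   check_models || echo "some model files are not in place yet"
```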

Workflow

To use the model, we need a workflow: a combination of nodes that takes in a prompt and produces an image.

Drag this link into the ComfyUI web UI to get the workflow.

Put your prompt into the CLIP Text Encode (Positive Prompt) node, and press “Run”!

On my hardware, it took 100 seconds to generate. Yours may be faster or slower.

You’ll find your outputs at ComfyUI/output.
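If you generate a lot, a quick way to see your most recent images from the terminal might look like the sketch below. `latest_outputs` is a made-up helper name, and the default path assumes you run it from the directory that contains ComfyUI.

```shell
# Hypothetical helper: list the most recently modified files in the
# output folder, newest first.
#   $1: output directory (default: ComfyUI/output)
#   $2: how many entries to show (default: 5)
latest_outputs() {
    ls -t "${1:-ComfyUI/output}" | head -n "${2:-5}"
}

# Usage:
#   latest_outputs                    # five newest files
#   latest_outputs ComfyUI/output 1   # only the newest file
```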